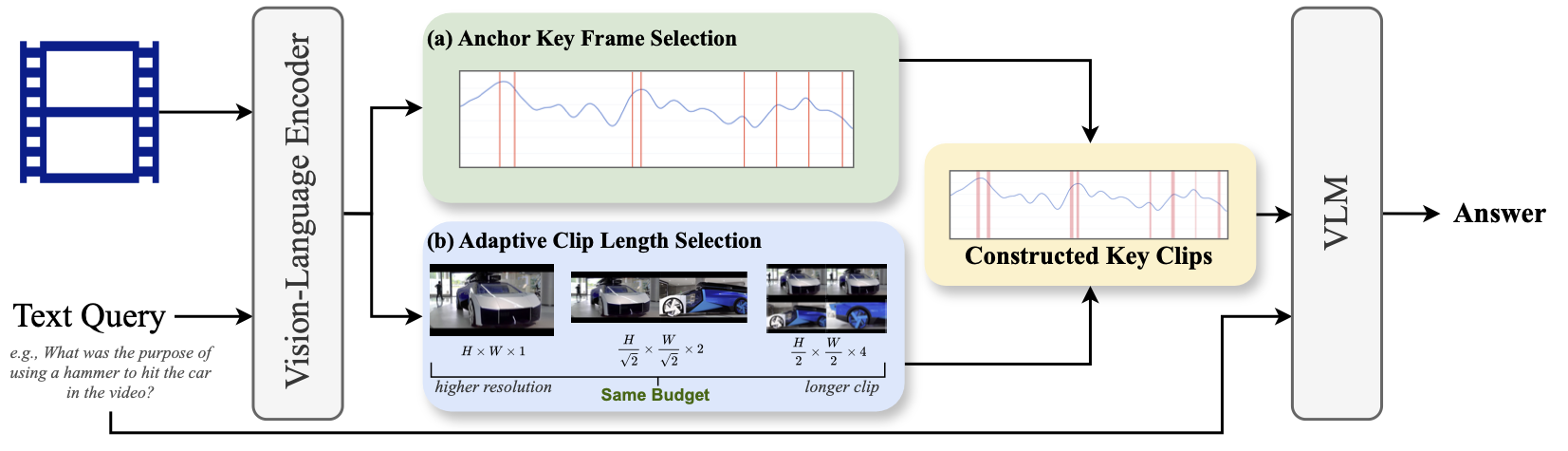

Figure 1: Overview of the F2C (From Frames to Clips) method. Instead of selecting isolated key frames, F2C identifies temporally coherent clips that preserve essential motion and event continuity for better video understanding.

Video Large Language Models (VLMs) have achieved remarkable results on a variety of vision-language tasks, yet their practical use is limited by the "needle in a haystack" problem: the massive number of visual tokens produced from raw video frames exhausts the model's context window. Existing solutions alleviate this issue by selecting a sparse set of frames, thereby reducing the token count, but such frame-wise selection discards essential temporal dynamics, leading to suboptimal reasoning about motion and event continuity.

In this work, we systematically explore the impact of temporal information and demonstrate that extending selection from isolated key frames to key clips (short, temporally coherent segments) improves video understanding. To maintain a fixed computational budget while accommodating the larger token footprint of clips, we propose an adaptive resolution strategy that dynamically balances spatial resolution and clip length, ensuring a constant token count per video.
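The trade-off between clip length and spatial resolution can be illustrated with a little arithmetic. The sketch below is not the paper's actual formulation: the function name `resolution_scale`, its parameters, and the assumption that a frame's visual token count grows with its pixel area (so the side length scales with the square root of the per-frame token share) are all illustrative.

```python
import math


def resolution_scale(token_budget: int, num_clips: int, clip_len: int,
                     tokens_per_frame_full: int) -> float:
    """Side-length scale in (0, 1] that keeps the video within token_budget.

    Hypothetical helper: assumes tokens per frame are proportional to
    frame area, which is an assumption and not taken from the paper.
    """
    if min(token_budget, num_clips, clip_len, tokens_per_frame_full) <= 0:
        raise ValueError("all arguments must be positive")

    total_frames = num_clips * clip_len
    # Token share each frame may use if the total budget stays fixed.
    tokens_per_frame = token_budget / total_frames
    # Area shrinks linearly with tokens, so each side shrinks by the square root.
    scale = math.sqrt(tokens_per_frame / tokens_per_frame_full)
    # Never upsample beyond the original resolution.
    return min(1.0, scale)


# Budget equal to 32 full-resolution frames at 196 tokens each.
budget = 32 * 196
print(resolution_scale(budget, num_clips=8, clip_len=4, tokens_per_frame_full=196))
print(resolution_scale(budget, num_clips=8, clip_len=16, tokens_per_frame_full=196))
```

Under these assumptions, eight clips of four frames fit the budget at full resolution, while stretching each clip to sixteen frames requires halving each side of the frame to keep the total token count unchanged.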

Experiments on three long-form video benchmarks demonstrate that our training-free approach, F2C, outperforms uniform sampling by up to 8.1%, 5.6%, and 10.3% on the Video-MME, LongVideoBench, and MLVU benchmarks, respectively. These results highlight the importance of preserving temporal coherence in frame selection and provide a practical pathway for scaling Video LLMs to real-world video understanding applications.

@article{sun2025frames,
  author = {Sun, Guangyu and Singhal, Archit and Uzkent, Burak and Shah, Mubarak and Chen, Chen and Kessler, Garin},
  title = {From Frames to Clips: Efficient Key Clip Selection for Long-Form Video Understanding},
  journal = {arXiv preprint arXiv:2510.02262},
  year = {2025},
  note = {Work done during an internship at Amazon Prime Video}
}